Some of the early modifications I made to the FlyCapture demo program were to reduce the frequency of the GDI-based UI thread, limit the size of the preview image, and force the preview to use only the easiest debayer algorithm (nearest-neighbor). This cut down the CPU utilization enough that the capture thread could buffer to RAM at full frame rate without getting bogged down by the UI thread. This was especially important on the Microsoft Surface Pro 2, my actual capture device, which has fewer cores to work with.

Adding the GPU debayer and color correction into the capture software lets me undo a lot of those restrictions. The GPU can run a nice debayer algorithm (this is the one I like a lot), do full color correction and sharpening, and render the preview to an arbitrarily large viewport. The CPU is no longer needed to do software debayer or GDI bitmap drawing. Its only responsibility is shuttling raw data to the GPU in the form of a texture. More on that later. This is the new, optimized architecture:

Camera capture and saving to the RAM buffer is unaffected. RAM buffer writing to disk is also unaffected. (Images are still written in RAW format, straight from the RAM buffer. GPU processing is for preview only.) I did simplify things a lot by eliminating all other modes of operation and making the RAM buffer truly circular, with one thread for filling it and one for emptying it. It's nice when you can delete 75% of your code and have the remaining bits still work just as well.
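As a rough illustration of the "one thread fills, one thread empties" arrangement, here is a minimal circular buffer sketch. It is not the actual capture code: the class name `FrameRing`, the lock-based synchronization, and the choice to drop a frame when the buffer is full are all assumptions made for the example.

```python
import threading


class FrameRing:
    """Fixed-size circular buffer for one producer and one consumer.

    Hypothetical sketch: the real capture program may handle a full
    buffer differently (here the new frame is simply rejected).
    """

    def __init__(self, capacity):
        self.slots = [None] * capacity
        self.capacity = capacity
        self.head = 0    # next slot the capture thread fills
        self.tail = 0    # next slot the disk-writer thread empties
        self.count = 0   # number of frames currently buffered
        self.lock = threading.Lock()

    def push(self, frame):
        """Capture thread: store a frame, or return False if the ring is full."""
        with self.lock:
            if self.count == self.capacity:
                return False
            self.slots[self.head] = frame
            self.head = (self.head + 1) % self.capacity
            self.count += 1
            return True

    def pop(self):
        """Writer thread: take the oldest frame, or return None if the ring is empty."""
        with self.lock:
            if self.count == 0:
                return None
            frame = self.slots[self.tail]
            self.slots[self.tail] = None
            self.tail = (self.tail + 1) % self.capacity
            self.count -= 1
            return frame
```

The head and tail indices wrap around with a modulo, so the same block of memory is reused indefinitely as long as the writer keeps up on average.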

The UI thread has the new DirectX-based GPU interface. Its primary job now is to shuttle raw image data from the camera to the GPU. The mechanism for doing this is via a bound texture - a piece of memory representing a 2D image that both the CPU and the GPU have access to (not at the same time). This would normally be projected onto a 3D object but in this case it just gets rendered to a full-screen rectangle. The fastest way to get the data into a texture is to marshal it in with raw pointers, something C# allows you to do only within the context of the "unsafe" keyword...I wonder if they're trying to tell you something.

The textures usually directly represent a bitmap. So, most of the texture formats are multi-channel color formats such as R32G32B32A32_Float (32 bits of floating point each for Red, Green, Blue, and Alpha). Since the data from the camera represents raw Bayer-masked pixels, I have had to abuse the pixel formats a little. For 8-bit data, it's not too bad. I am using R8_UNorm format, which just takes an unsigned 8-bit value and normalizes it to the range 0.0f to 1.0f.
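To make the 8-bit case concrete, the sketch below shows what a UNorm format does to each raw value, and how a shader might decide which color filter sits over a given pixel. The function names are made up for illustration, and the RGGB mosaic layout is an assumption; the actual sensor may use a different arrangement.

```python
def unorm8_to_float(value):
    """Map an unsigned 8-bit value to [0.0, 1.0], as an 8-bit UNorm format does."""
    if not 0 <= value <= 255:
        raise ValueError("expected an 8-bit value")
    return value / 255.0


def bayer_channel(x, y):
    """Return the color filter over pixel (x, y), ASSUMING an RGGB mosaic."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"
```

Because each texel holds a single raw sample, the color meaning comes only from the pixel's position in the mosaic, which is why a one-channel format is enough here.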

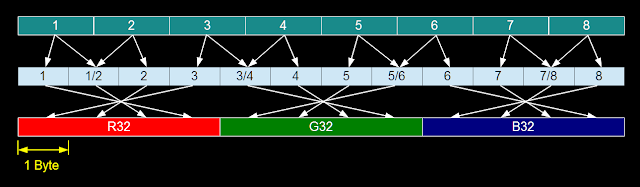

12-bit is significantly more complicated, since there are no 12- or 24-bit pixel formats into which one or two pixels can be stuffed cleanly. Instead, I'm using R32G32B32_UInt and Texture.Load() instead of Texture.Sample(). This allows direct bitwise unpacking of the 96-bit pixel data, which actually contains eight adjacent 12-bit pixels. And I do mean bitwise...the data goes through two layers of rearrangement on its way into the texture, each with its own quirks and endianness, so there's no clean way to sort it out without bitwise operations.
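The sketch below shows the general idea of pulling eight 12-bit pixels out of three 32-bit words using only shifts and masks, the same kind of operations available in a shader. The bit layout here is a deliberately simple, hypothetical one (pixels packed back to back, least significant bits first); the real camera data uses a different ordering, so this is an illustration of the technique and not a description of the actual shader.

```python
def pack_texel(pixels):
    """Pack eight 12-bit pixels into three 32-bit words (hypothetical layout)."""
    assert len(pixels) == 8
    value = 0
    for i, p in enumerate(pixels):
        value |= (p & 0xFFF) << (12 * i)
    return [(value >> (32 * w)) & 0xFFFFFFFF for w in range(3)]


def unpack_texel(words):
    """Recover eight 12-bit pixels from three 32-bit words with 32-bit-style ops."""
    pixels = []
    for i in range(8):
        word, offset = divmod(12 * i, 32)
        value = words[word] >> offset
        if offset > 20:
            # The pixel straddles a word boundary: take the rest from the next word.
            value |= words[word + 1] << (32 - offset)
        pixels.append(value & 0xFFF)
    return pixels
```

Even in this tidy layout, two of the eight pixels (the third and the sixth) straddle a word boundary and have to be stitched together from two channels of the texel.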

In order to accommodate both 8-bit and 12-bit data, I added an unpacking step that is just another pixel shader that converts the raw data into a common 16-bit single-color format before it goes into the debayer, color correction, and convolution shader passes just like in the RAW file viewer. The shader file is linked below for anyone who's interested.
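One way to picture the unpacking step is as a rescale of each raw value to a shared 16-bit range, so that full scale in either input depth lands on full scale in the output. The function below is a hypothetical sketch of that idea using integer arithmetic; the actual shader works on normalized floats and may round differently.

```python
def to_common16(value, bits):
    """Rescale a raw sample of the given bit depth to the 0..65535 range.

    Hypothetical helper: rounds to nearest using integer math only.
    """
    max_in = (1 << bits) - 1
    if not 0 <= value <= max_in:
        raise ValueError("value out of range for bit depth")
    return (value * 65535 + max_in // 2) // max_in
```

For example, `to_common16(255, 8)` and `to_common16(4095, 12)` both give 65535, so the later debayer and color correction passes do not need to know which capture mode produced the data.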

The end result of all this is that I get a cheaply-rendered, high-quality preview in the capture program, up to full screen, which looks great on the Surface Pro 2:

Once the image is in the GPU, there's almost no end to the amount of fast processing that can be done with it. Shown in the video above is a feature I developed for the RAW viewer, saturation detection. Any pixel that is clipped in red, green, or blue value because of overexposure or over-correction gets its saturated color(s) inverted. In real time, this is useful for setting up the exposure. Edge detection for focus assist can also be done fairly easily with the convolution shader. The thread timing diagnostics show just how fast the UI thread is now. Adding a bit more to the shaders will be no problem, I think.
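The saturation detection idea can be sketched per pixel as follows. This is a simplified stand-in for the shader logic: the function name, the 1.0 clipping limit, and the choice to flag only the high end of the range are assumptions for the example.

```python
def mark_saturation(rgb, limit=1.0):
    """Invert any channel that has reached the clipping limit.

    rgb is a tuple of normalized floats. A clipped channel (>= limit) is
    shown as 0.0 so it stands out; unclipped channels pass through.
    """
    return tuple(0.0 if c >= limit else c for c in rgb)
```

A blown-out white pixel such as `(1.0, 1.0, 1.0)` comes out black, while a pixel clipped only in red, such as `(1.0, 0.5, 0.2)`, loses just its red channel, which makes overexposed regions easy to spot in the live preview.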

For now, here is the shader file that does 8-bit and 12-bit unpacking (12-bit is only valid for this camera...other cameras may have different bit-ordering!), as well as the debayer, color correction, and convolution shaders.

Shaders: debayercolor_preview.fx

Hi Shane.

I would like to give you a big thank you for sharing such important information about technology for the "balance things"!!!

I am using your information and the OpenSource code others did based on the complementary filter <3

I am implementing an OpenSource firmware for the cheap "chinese" boards of the cheap generic Electric Unicycles: https://github.com/generic-electric-unicycle/documentation/wiki

Thank you for sharing <3