I've now got a complete mobile setup working for the Grasshopper3 camera that I started playing with last week, and I took it for a spin during the Freefly company barbecue. (It's Seattle, so barbecues are indoor events. And it's Freefly, so there are RC drift cars everywhere.)

Since the camera communicates with a Windows or Linux machine over USB 3.0, I went looking for small USB 3.0-capable devices to go with it. There are a few interesting options. My laptop, which I carry around 90% of the time anyway, is the default choice. It is technically portable, but it's not really something you could use like a handheld camcorder.

The smallest and least expensive device I found, thanks to a tip in last post's comments, is the ODROID-XU. At first I was skeptical that this small embedded Linux board could work with the camera, but there is actually a Point Grey app note describing how to set it up. The fixed 2GB of RAM would be limiting for buffering at full frame rate. And there is no SATA, since the single USB 3.0 interface is intended for fast hard drives. So it would be limited to recording short bursts or at low frame rates, I think. But for the price it may still be interesting to explore. I will have to become a Linux hacker some day.

The Intel NUC, with a 4"x4" footprint, is another interesting choice if I want to turn it into a boxed camera, with up to 16GB of RAM and a spot for an SSD. The camera's drivers are known to work well on Intel chipsets, so this would be a good place to start. It would need a battery to go with it, but even so the resulting final package would be pretty compact and powerful. The only thing that's missing is an external monitor via HDMI out.

|

| The Grasshopper3 takes better pictures at 150fps than my phone does stills. |

Other than a brief mention in a Point Grey app note, there wasn't any documentation that convinced me the Surface Pro 2 would work, but it has an Intel i5-4300 series, 8GB of RAM, and USB 3.0, so it seemed likely. And it did work, although at first not quite as well as my laptop (which is an i7-3740QM with 16GB of RAM). Using the FlyCapture2 Viewer, I could reliably record 120fps on the laptop, and sometimes, if I killed all the background processes and the wind was blowing in the right direction, 150fps. On the Surface, those two numbers were more like 90fps and 120fps. Understandable, if the limitation really is processing power.

I also could not install the Point Grey USB 3.0 driver on the Surface. I tried every trick I know for getting third-party drivers to install in Windows: disabling driver signing (even though they are signed drivers), modifying the .INF to trick Windows into accepting that it was in fact a USB 3.0 driver, turning off Secure Boot and UEFI mode, and forcing the issue by uninstalling the old driver. No matter what, Windows 8.1 would not let me change drivers. I read on that internet thing that Windows 8 has its own integrated USB 3.0 driver, even though it still says Intel in the driver name. Anyway, after a day of cursing at Windows 8 for refusing to let me do a simple thing, I gave up on that approach and started looking at software.

The FlyCapture2 Viewer is a convenient GUI for streaming and saving images, but it definitely has some weird quirks. It tries to display images on screen at full frame rate, which is silly at 150fps. Most monitors can't keep up with that, and it's using processing power to convert the image to a GDI bitmap and draw graphics. The program also doesn't allow pure RAM buffering. It always tries to convert and save images to disk, filling the RAM buffer only if it is unable to do so fast enough. At 150fps, this leads to an interesting memory and processor usage waveform:

|

| Discontinuous-mode RAM converter. |

During the up slope of the memory usage plot, the program is creating a FIFO buffer in RAM and simultaneously pulling images out, converting them to their final still or video format, and writing them to disk. During the down slope, recording has stopped and the program finishes converting and saving the buffer. You can also see from the processor usage that even just streaming and displaying images without recording (when the RAM slope is zero) takes up a lot of processor time.

The difference between the up and down slopes is the reason why there needs to be a buffer. Hard disk speed can't keep up with the raw image data. An SSD like the one on the Surface Pro 2 has more of a chance, but it still can't record 1:1 at 150fps. It can, however, operate continuously at 30fps and possibly faster with some tweaking.

But to achieve actual maximum frame rate (USB 3.0 bus or sensor limited), I wanted to be able to 1) drop display rate down to 30fps and 2) only buffer into RAM, without trying to convert and save images at the same time. This is how high-speed cameras I've used in the past have worked. It means you get a limited record time based on available memory, but it's much easier on the processor. Converting and saving is deferred until after recording has finished. You could also record into a circular RAM buffer and use a post trigger after something exciting happens. Unfortunately, as far as I could tell, the stock FlyCapture2 Viewer program doesn't have these options.
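The post-trigger idea is easy to picture with a toy ring buffer. This is only a minimal Python sketch of the concept, not FlyCapture2 code: `RingRecorder`, `push`, and `trigger` are made-up names, and the "frames" are stand-in byte strings.

```python
from collections import deque


class RingRecorder:
    """Keep only the most recent `capacity` frames in RAM (hypothetical helper)."""

    def __init__(self, capacity):
        # A deque with maxlen drops the oldest frame automatically once full.
        self.frames = deque(maxlen=capacity)
        self.recording = True

    def push(self, frame):
        # Called once per captured frame; ignored after the trigger fires.
        if self.recording:
            self.frames.append(frame)

    def trigger(self):
        # Post trigger: freeze the buffer and return the frames leading up to it.
        self.recording = False
        return list(self.frames)


recorder = RingRecorder(capacity=3)
for i in range(5):
    recorder.push(f"frame-{i}".encode())
kept = recorder.trigger()   # only the last three frames survive
recorder.push(b"late")      # arrives after the trigger, so it is dropped
```

The record time is still capped by the buffer size, but the cap now counts backwards from the moment the trigger is pressed.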

The FlyCapture2 SDK, though, is extensive and has tons of example code. I dug around for a while and found the SimpleGUI example project was the easiest to work with. It's a Visual C# .NET project. I hadn't used C# before, but since I know C and VB.NET, it was easy enough to pick up. The project has only an image viewer and access to the standard camera control dialog, with no capacity to buffer or save. So that part I have been adding myself. It's still a work in progress, so I won't post any source yet, but you can see the interface on this contraption:

|

| Part of the motivation for choosing the Surface was so I could make the most absurd Mōvi monitor ever. |

To the SimpleGUI I have just added a field for frame buffer size, a Record button, and a Save Buffer button. In the background, I have created an array of images that is dynamically allocated space in RAM as it gets filled up with raw images from the camera. I also modified the display code to only run at a fraction of the camera frame rate. (The code is well-written and places display and image capture on different threads, but I still think lowering the display rate helps.)
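The record path described above boils down to "append every raw frame, show only every Nth one." Here is a rough Python sketch of that logic under stated assumptions: it is not the modified SimpleGUI source (which is C#), `record` and its parameters are hypothetical, and the frames are placeholder bytes rather than camera images.

```python
def record(frames, buffer_size, camera_fps=150, display_fps=30):
    """Buffer raw frames in a list and pick every Nth one for display."""
    display_every = max(1, camera_fps // display_fps)  # 150 // 30 -> every 5th frame
    buffer = []      # grows as frames arrive, like a dynamically allocated array
    displayed = []   # indices of frames that would be handed to the display
    for index, frame in enumerate(frames):
        if len(buffer) >= buffer_size:
            break  # buffer full: recording stops here
        buffer.append(frame)  # store the raw frame, no conversion yet
        if index % display_every == 0:
            displayed.append(index)
    return buffer, displayed


# 20 fake one-byte frames, room for 12 of them in the buffer
buffer, displayed = record((bytes([i]) for i in range(20)), buffer_size=12)
```

Nothing is converted or written to disk inside the loop, which is the whole point: the expensive work waits until recording is over.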

Once the buffered record is finished, Save Buffer starts the processor-intensive work of converting the image to its final format (including doing color processing and compression). It writes the images to a folder and clears out the RAM buffer as it goes. With the Surface's SSD, the write process is relatively quick. Not quite 150fps quick, but not far off either. So you record for 10-20 seconds, then save for a bit longer than that. Of course, you can still record continuously at lower frame rates using the normal FlyCapture2 Viewer. But this allows even the Surface to hit maximum frame rate.

|

| All hail USB 3.0. |

|

| I just have to worry about cracking the screen now. |

There are still a number of things I want to add to the program. I tested the circular buffer with post-trigger idea but couldn't get it working quite the way I wanted yet. I think that is achievable, though, and would make capturing unpredictable events much easier. I also want to attempt to write my own simultaneous buffering and converting/saving code to see if it can be any faster than the stock Viewer. I doubt it will but it's worth a try. Maybe saving raw images without trying to convert formats or do color processing is possible at faster rates. And there are some user interface functions to improve on. But in general I'm happy with the performance of the modified SimpleGUI program.

And I'm happy with the Grasshopper3 + Surface Pro 2 combo in general. They work quite nicely together, since the Surface combines the functions of monitor and recorder into one relatively compact device. The real enabler here is USB 3.0, though. It's hard to even imagine the transfer speeds at work. At 350MB/s, at any given point in time there are 10 bits, more than an entire pixel, contained in the two feet of USB 3.0 cable going from the camera to the Surface.
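For anyone who wants to check that bits-in-the-cable figure, here is a back-of-the-envelope version in Python. Two things here are assumptions that are not stated in the post: a signal velocity of about 0.66c in the cable, and counting the 8b/10b line coding that USB 3.0 uses (10 wire bits per data byte). The result is a rough estimate, and it shifts with either assumption.

```python
SPEED_OF_LIGHT = 299_792_458   # m/s
VELOCITY_FACTOR = 0.66         # assumed fraction of c for the cable
CABLE_LENGTH_M = 2 * 0.3048    # two feet
DATA_RATE_BYTES = 350e6        # 350MB/s from the camera
WIRE_BITS_PER_BYTE = 10        # 8b/10b line coding on USB 3.0

# Time for a bit to travel the length of the cable
transit_time_s = CABLE_LENGTH_M / (SPEED_OF_LIGHT * VELOCITY_FACTOR)

# Average number of wire bits in flight at that data rate
bits_in_cable = DATA_RATE_BYTES * WIRE_BITS_PER_BYTE * transit_time_s

print(f"{transit_time_s * 1e9:.2f} ns in the cable, about {bits_in_cable:.1f} bits in flight")
```

Under those assumptions the estimate lands between 10 and 11 bits, in line with the figure above.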

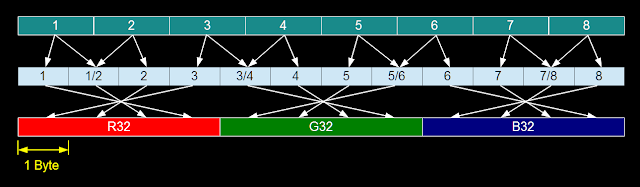

The sheer amount of data being generated is mind-boggling. For maximum frame rate, the RAM buffer must save raw images, which are 8 bits per pixel at 1920x1200 resolution. Each pixel has a color defined by the Bayer mask. (Higher bit depths and more advanced image processing modes are available at lower frame rates.) On the Surface, this means about 18 seconds of 150fps buffering, at most.
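The raw numbers are quick to work out. This small Python calculation uses only the figures quoted above (1920x1200, 8 bits per pixel, 150fps, 18 seconds); the variable names are just for illustration.

```python
WIDTH, HEIGHT = 1920, 1200
BYTES_PER_PIXEL = 1   # 8-bit raw Bayer data
FPS = 150

bytes_per_frame = WIDTH * HEIGHT * BYTES_PER_PIXEL   # one raw frame
bytes_per_second = bytes_per_frame * FPS             # stream rate into RAM
ram_for_18_seconds = bytes_per_second * 18           # buffer needed for 18 s

print(f"{bytes_per_frame / 1e6:.1f} MB per frame")
print(f"{bytes_per_second / 1e6:.1f} MB/s")
print(f"{ram_for_18_seconds / 1e9:.2f} GB for 18 seconds")
```

That works out to roughly 2.3MB per frame, 345.6MB/s, and a bit over 6GB for 18 seconds, which is most of what an 8GB machine has to spare.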

There are a variety of options available for color processing the raw image, and after color processing it can be saved as a standard 24-bit bitmap, meaning 8 bits of red, green, and blue for each pixel. In this format, each frame is a 6.6MB file. This fills up the 256GB SSD after just four minutes of video... So a better option might be to save the frames as high-quality JPEGs, which seems to offer about a 10:1 compression. Still, getting frame data off the Surface and onto my laptop for editing seemed like it would be a challenge.
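The same kind of arithmetic shows how fast bitmaps eat the disk. This is a rough Python sketch that assumes the whole 256GB (decimal) is free, ignores the small bitmap header, and takes the 10:1 JPEG ratio at face value.

```python
WIDTH, HEIGHT = 1920, 1200
FPS = 150
SSD_BYTES = 256_000_000_000     # assumes a completely empty 256GB drive

bmp_bytes = WIDTH * HEIGHT * 3  # 24-bit color, header ignored
bmp_mib = bmp_bytes / 2**20     # about 6.6 when counted in binary megabytes

frames_on_ssd = SSD_BYTES // bmp_bytes
minutes_of_bmp = frames_on_ssd / FPS / 60   # minutes of 150fps footage
minutes_of_jpeg = minutes_of_bmp * 10       # assumed 10:1 compression

print(f"{bmp_mib:.1f} MiB per bitmap, {frames_on_ssd} frames fit")
print(f"about {minutes_of_bmp:.1f} min as BMP, about {minutes_of_jpeg:.0f} min as JPEG")
```

So roughly four minutes of 150fps footage as bitmaps, or something like forty minutes as JPEGs.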

|

| Enter the RAGE. |

USB 3.0 comes to the rescue here as well, though. There are many extremely fast USB 3.0 thumb drives now. This 64GB one has a write speed of 50MB/s and a read speed nearing 200MB/s (more than half as fast as the camera streams data). And it's not even close to the fastest one available. The read speed is so fast that it's actually way better for me to just edit off of this drive than to transfer anything to my laptop's hard drive.

|

| Solid- I mean, Lightworks. |

Lightworks seems to handle .bmp or .jpg frame folders nicely, importing continuously-numbered image sequences without a hassle. If they're on a fast enough disk, previewing them is no problem either. So I can just leave the source folders on the thumb drive - a really nice workflow, actually. When the editing is all done, I can archive the entire drive and/or just wipe it and start again.

While I was grabbing the USB 3.0 thumb drive, I also found this awesome thing:

It's a ReTrak USB 3.0 cable, which is absolutely awesome for Mōvi use - even better than the relatively flexible $5 eBay cable I bought to replace the suspension bridge cable that Point Grey sells. It's extremely thin and flexible, so as to impart minimum force onto the gimbal's stabilized payload. I'm not sure the shielding is sufficient for some of the things I plan to do with it, though, so I'll keep the other cables around just in case...

That's it for now. Here's some water and watermelon: