This post continues an exploration into the inner workings of PCIe. The previous post presented a top-level view of the PCIe Controller as a memory bus extension, with discussion of the various overheads associated with wrapping memory transfers into serial data packets. In this post, I want to go to the other extreme and look at one of the low-level logic mechanisms that PCIe depends on for reliable data transfer: the parallel Linear-Feedback Shift Register (LFSR). This mechanism efficiently generates the pseudo-randomness required to ensure DC-balanced serial data, and is also used to validate Transaction Layer Packets (TLPs) with a Cyclic Redundancy Check (CRC).

PCIe 3.0 Scrambler

PCIe signals are driven across AC-coupled differential pairs to increase immunity to noise. The transmitter and receiver may be on different boards, far apart from each other, with significant DC or low-frequency ground offset between them. Adding series capacitors to the differential signal provides low-voltage level shifting capability to deal with this. But this only works if the data coming across the link is DC-balanced over an interval much shorter than the time constant formed by the AC coupling capacitor and termination resistor. That time constant is typically 10⁴ to 10⁵ UI.

PCIe 1.0 and 2.0 use 8b/10b encoding to enforce DC balance. This encoding tracks the running disparity of the serial data stream and modifies 10b symbols (representing 8b data) to keep it in balance. This is also the encoding used in USB all the way up to USB 3.x Gen 1 (5Gbps), which is the same speed as PCIe 2.0. It's simple and deterministic, but it has a poor serial encoding efficiency of only 80% (8/10).

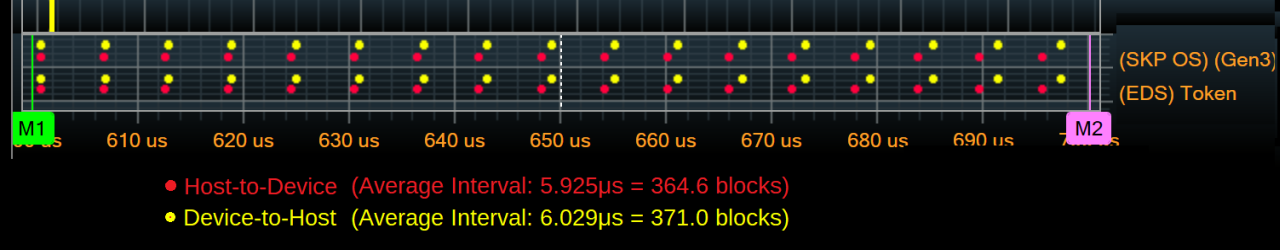

By contrast, PCIe 3.0 through PCIe 5.0 use 128b/130b encoding, where two sync bits are prepended to 128b data payloads to form 130b blocks. As discussed in the previous post, this has a much better serial encoding efficiency of 98.5% (128/130). However, the two sync bits are not sufficient to control running disparity with a 128b data payload. Instead, the data is sent through a scrambler, a Pseudo-Random Number Generator (PRNG) that remaps bits in a way that both the transmitter and receiver understand. The output stream is statistically DC-balanced for realistic data.

The PCIe implementation of the PRNG for scrambling is as a Linear-Feedback Shift Register (LFSR). In the case of PCIe 3.0, the canonical implementation is a 23-bit shift register with strategically-placed XORs between some bits to instigate pseudo-randomness. The output of the shift register is then XORed with each data bit to generate the scrambled output. Each lane gets its own LFSR scrambler seeded with a different value.
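As a rough illustration, the single-bit version can be sketched in a few lines of Python. This is a minimal sketch, assuming a Galois-style register for the polynomial x^23 + x^21 + x^16 + x^8 + x^5 + x^2 + 1. The function names, the bit ordering, and the seed in the usage lines are illustrative assumptions, not the per-lane values or bit conventions from the specification.

```python
WIDTH = 23
MASK = (1 << WIDTH) - 1
# Feedback taps for x^23 + x^21 + x^16 + x^8 + x^5 + x^2 + 1
# (the x^23 term is implicit in the register width).
TAPS = (1 << 21) | (1 << 16) | (1 << 8) | (1 << 5) | (1 << 2) | 1


def lfsr_step(state):
    """Advance the LFSR by one bit. Returns (new_state, output_bit)."""
    out = (state >> (WIDTH - 1)) & 1
    state = (state << 1) & MASK
    if out:
        state ^= TAPS
    return state, out


def scramble(bits, seed):
    """XOR each data bit with the LFSR output. Returns (bits_out, final_state)."""
    state = seed
    result = []
    for b in bits:
        state, k = lfsr_step(state)
        result.append(b ^ k)
    return result, state


# Usage: scrambling twice with the same seed recovers the original data.
EXAMPLE_SEED = 0x123456  # arbitrary nonzero value, for illustration only
data = [1, 0, 1, 1, 0, 0, 1, 0] * 4
scrambled, _ = scramble(data, EXAMPLE_SEED)
recovered, _ = scramble(scrambled, EXAMPLE_SEED)
print(recovered == data)
```

Because the data never feeds back into the register, the receiver can descramble by running an identical LFSR from the same seed.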

This is simple logic, but it would need to run at 8GHz to be implemented in single-bit fashion like this. That's not really practical even in dedicated silicon, and is completely impossible using FPGA sequential logic. However, it's possible to parallelize the LFSR to any data width pretty easily. The key is in the name: the operation is linear, so the contributions of each bit of input data and the initial LFSR can be superimposed to generate each bit of output data and the final LFSR. This method of parallelizing the LFSR is covered very well at OutputLogic.com, with utilities to generate Verilog implementations of any LFSR and data width. I will only briefly describe the procedure here.

Using for example the 23-bit LFSR and a 32-bit data path (common for each PCIe 3.0 lane with a 250MHz PHY clock), there are a total of 23 + 32 = 55 bits that can contribute to the final LFSR and output data. Set each of those bits to one in turn, with all other bits at zero, then run the LFSR forward by 32 steps, and record the contribution of that input bit to the output data and final LFSR. This creates a big table of bit contributions:
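The procedure can be sketched directly in Python. This is only an illustration of the superposition idea, not the OutputLogic.com generator: the Galois-style step, the bit ordering (data bit 0 first), and all function names are assumptions made for the example.

```python
WIDTH, DATA_W = 23, 32
MASK = (1 << WIDTH) - 1
TAPS = (1 << 21) | (1 << 16) | (1 << 8) | (1 << 5) | (1 << 2) | 1


def serial(state, data):
    """Reference: run DATA_W single-bit steps. Returns (final_state, data_out)."""
    out = 0
    for i in range(DATA_W):
        k = (state >> (WIDTH - 1)) & 1
        state = (state << 1) & MASK
        if k:
            state ^= TAPS
        out |= (((data >> i) & 1) ^ k) << i
    return state, out


def build_table():
    """One row per input bit: its contribution to (final_state, data_out)."""
    table = []
    for bit in range(WIDTH + DATA_W):
        state = (1 << bit) if bit < WIDTH else 0
        data = (1 << (bit - WIDTH)) if bit >= WIDTH else 0
        table.append(serial(state, data))
    return table


def parallel(state, data, table):
    """XOR together the table rows of every input bit that is set."""
    inputs = state | (data << WIDTH)
    next_state, out = 0, 0
    for bit, (c_state, c_out) in enumerate(table):
        if (inputs >> bit) & 1:
            next_state ^= c_state
            out ^= c_out
    return next_state, out


table = build_table()
print(parallel(0x123456, 0xDEADBEEF, table) == serial(0x123456, 0xDEADBEEF))
```

In hardware, each row of the table is not looked up at run time. It only determines which input bits are wired into the XOR for each output bit.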

The full parallel operation is just the sum (in mod 2, so XOR) of contributions from each bit of input data and the initial LFSR. Each bit of the output data and final LFSR is the XOR combination of a specific set of input bits, with at most 55 contributing bits. On a Xilinx UltraScale+ FPGA, wide XORs like this are easy to build using nested six-input LUTs. With two levels, you get 6² = 36 inputs. With three levels, 6³ = 216 inputs. Each level has a propagation time on the order of 1ns, so even nested three deep it's capable of running at 250MHz.
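The depth arithmetic is easy to check. The sketch below uses the rough 1ns-per-level figure from above and ignores routing delay, so it is a back-of-envelope estimate rather than a timing analysis. The function names are made up for the example.

```python
def lut6_levels(n_inputs):
    """Levels of six-input LUTs needed to XOR n_inputs bits into one bit."""
    levels, capacity = 1, 6
    while capacity < n_inputs:
        capacity *= 6
        levels += 1
    return levels


def fits_clock(n_inputs, clock_mhz=250.0, ns_per_level=1.0):
    """True if the rough logic delay of the XOR tree fits in one clock period."""
    period_ns = 1000.0 / clock_mhz
    return lut6_levels(n_inputs) * ns_per_level < period_ns


for n in (36, 55, 216):
    print(n, lut6_levels(n), fits_clock(n))
```

A 55-input XOR needs three levels, which at roughly 3ns still fits inside the 4ns period of a 250MHz clock.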

Link CRC

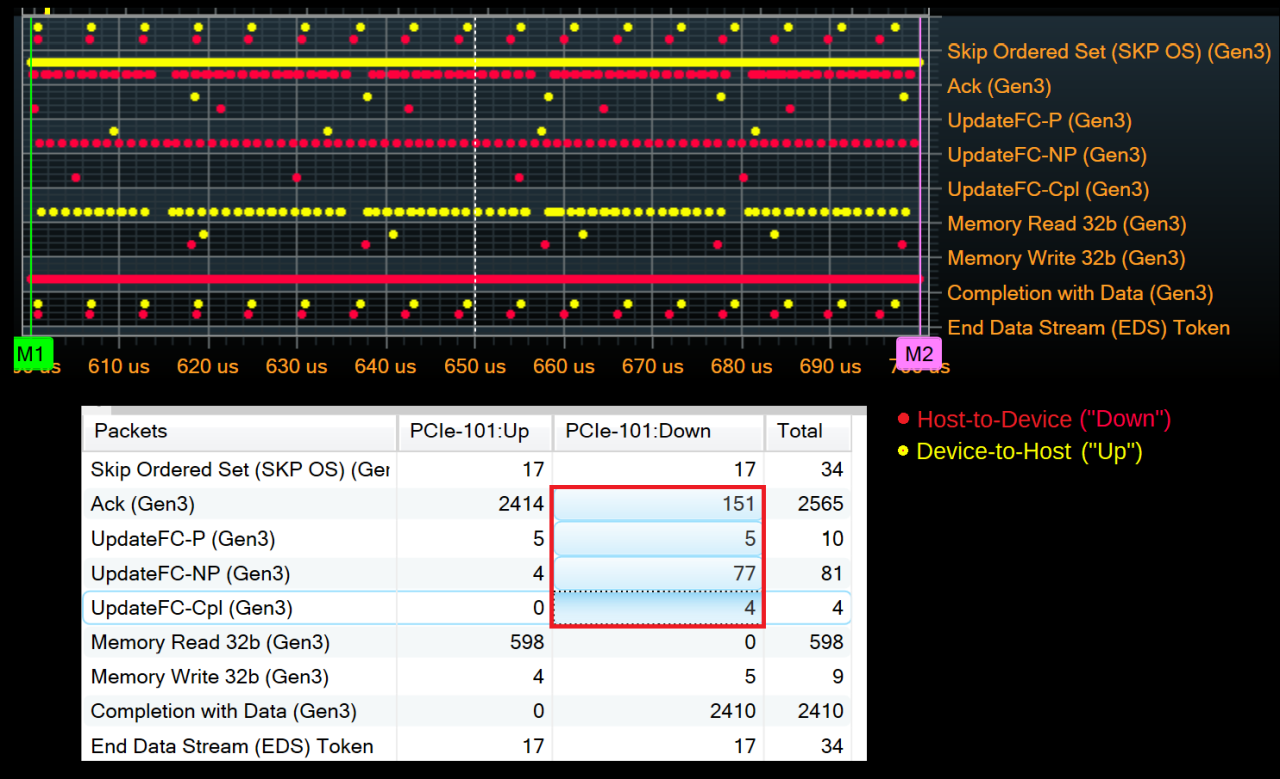

Another use for the parallel LFSR is in the generation and checking of the Link CRC, a 32-bit value used for error detection in TLPs. The LCRC acts like a signature for the bits in the TLP and the value received must match the value calculated by the receiver, or the TLP is rejected. The LCRC mechanism uses a 32-bit LFSR (with XOR positions described by the standard CRC-32 polynomial 0x04C11DB7), seeded to 0xFFFFFFFF at the start of each TLP. At the end of the TLP, the 32-bit LFSR value is mapped to the packet's LCRC through some additional bit manipulation.
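For reference, the single-bit form of this LFSR can be sketched as below. It is a minimal sketch that only covers the register update with the polynomial and seed named above. The order in which TLP bits are fed in and the final mapping from LFSR value to LCRC are left out, and the function names are illustrative.

```python
POLY = 0x04C11DB7
SEED = 0xFFFFFFFF
MASK32 = 0xFFFFFFFF


def crc_step(state, bit):
    """Advance the 32-bit LFSR by one data bit (MSB-first convention)."""
    feedback = ((state >> 31) & 1) ^ bit
    state = (state << 1) & MASK32
    if feedback:
        state ^= POLY
    return state


def lcrc_lfsr(bits, state=SEED):
    """Run the LFSR over a sequence of bits and return the final state."""
    for b in bits:
        state = crc_step(state, b)
    return state


# Usage: a single flipped bit produces a different final LFSR value.
packet = [1, 0, 0, 1, 1, 0, 1, 0] * 12
corrupted = list(packet)
corrupted[5] ^= 1
print(hex(lcrc_lfsr(packet)), hex(lcrc_lfsr(corrupted)))
```

Unlike the scrambler, the data bit is part of the feedback term, so the final register value depends on every bit of the packet.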

The LCRC operation can be parallelized in the same way as the Scrambler. The main differences are that the data is unmodified by the LCRC operation and that the data does contribute to the XOR sum of the next LFSR value. (This is what drives the LCRC to a unique value for each TLP.) In table form, this just changes which quadrants have XOR contributions:

Although there are fewer rows to handle, there are now 128 + 32 = 160 columns. The LCRC is calculated on packets before they are striped across all lanes. So for a PCIe 3.0 x4 link, instead of four 32-bit data paths as in the Scrambler, there is just one 128b data path operating at 250MHz. Any of these bits and any of the 32 bits of the previous clock cycle's LFSR might contribute to the XOR for each bit of the new LFSR. This isn't a problem, though, since three levels of LUT6 can handle up to 216 XOR inputs at 250MHz, as described above.

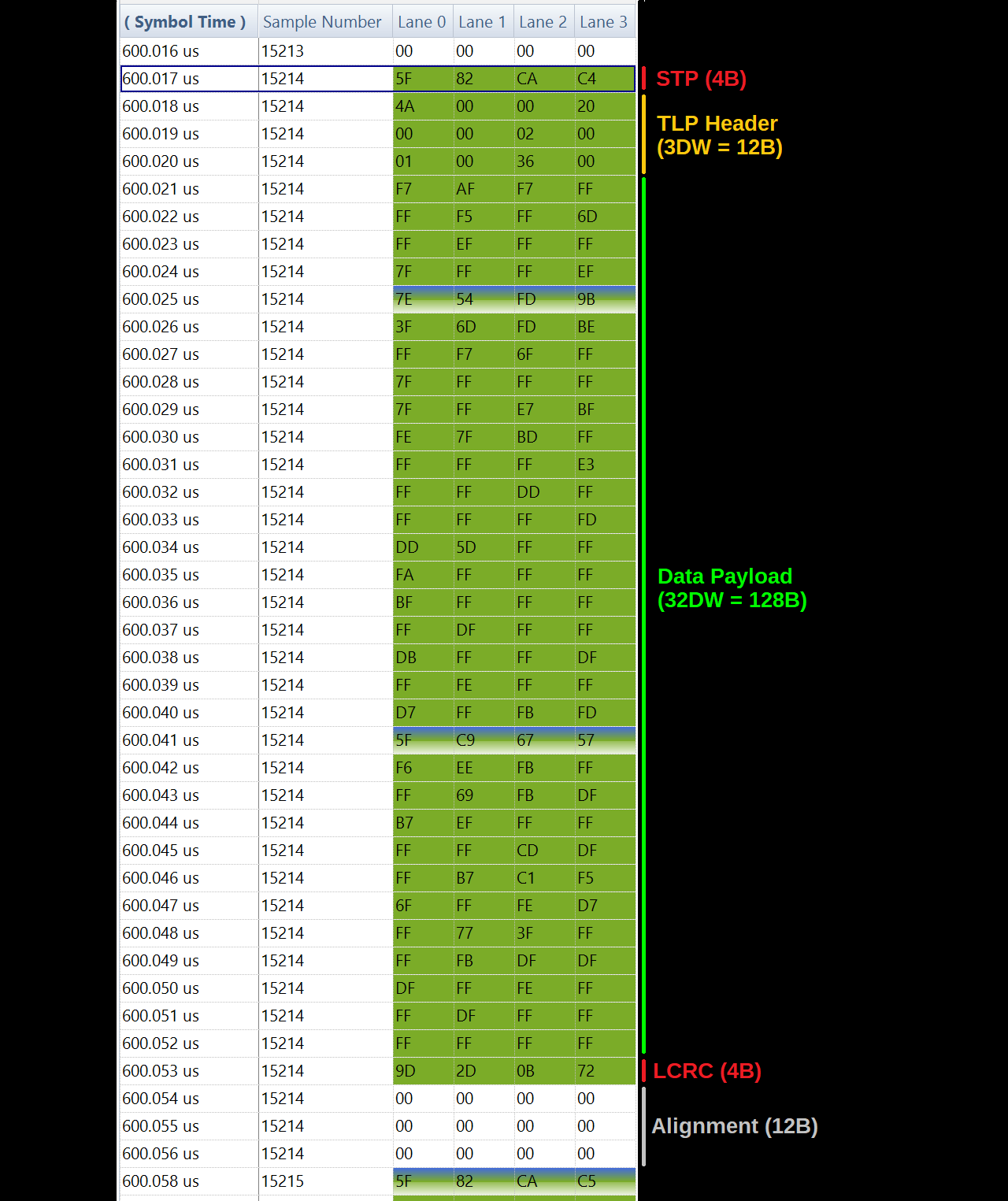

Where things do get a little complicated is in data alignment. TLP lengths are multiples of one Double Word (DW), or 32b. So, even without considering framing, 3/4 of the possible lengths would not fit evenly into 128b data beats. Each TLP is also prepended with a 32-bit framing token (STP), the latter half of which is fed into the LCRC computation as well. The LCRC therefore covers 16 + 32n bits for n DW of TLP header and data, which is never a multiple of 128. So in fact all cases will involve a partial data beat.

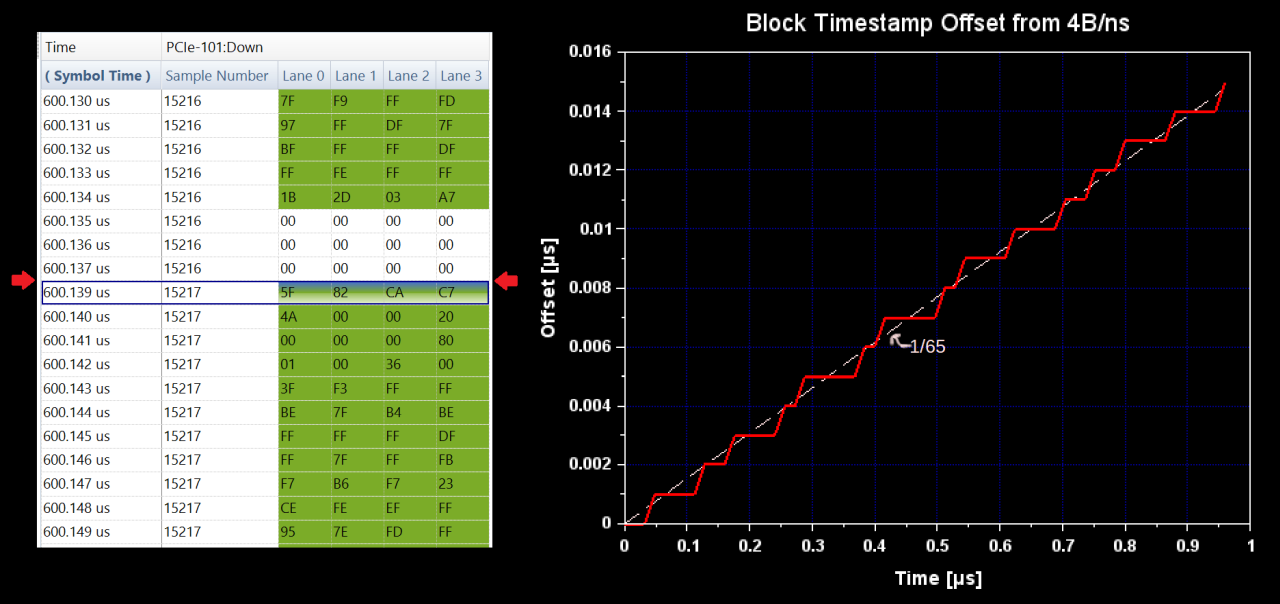

To handle this with a 128b parallel LFSR, the LCRC mechanism must get clever. Based on the length of the packet (which is known once the STP is parsed), the 128b data window can be shifted such that the last data beat will be aligned with the end of the packet. This ensures that the final LFSR value can be used directly to generate the LCRC. Then, the first 128b data beat is padded with zeros up to the middle of the STP token, where the LCRC computation begins. (In the case of a 3DW header with no data, the first and last data beat are the same.) This creates four possible alignment cases that repeat based on the length of the TLP:
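The alignment cases follow from simple arithmetic, sketched below. The sketch assumes the LCRC covers 16 bits of the STP token plus 32 bits per DW of header and data, as described above. The function and constant names are made up for the example.

```python
BEAT_BITS = 128
STP_BITS_IN_LCRC = 16  # latter half of the 32-bit STP token


def alignment(tlp_dw):
    """Return (zero_pad_bits, beats) for a TLP of tlp_dw DWs (header + data)."""
    covered = STP_BITS_IN_LCRC + 32 * tlp_dw
    pad = -covered % BEAT_BITS  # zeros in front so the last beat ends on the packet
    beats = (covered + pad) // BEAT_BITS
    return pad, beats


for dw in range(3, 11):
    pad, beats = alignment(dw)
    print(f"{dw} DW: pad {pad} zeros, {beats} beat(s)")
```

A 3DW header with no data needs 16 zeros of padding and fits in a single beat, and the padding then cycles through the four values as the length grows by one DW at a time.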

Depending on the alignment case, the LFSR is seeded with a different value that accounts for the extra {16, 48, 80, 112} zeros padded onto the first data beat. These seed values are derived by seeding the reference single-bit implementation of the LFSR with 0xFFFFFFFF, then running it backwards for {16, 48, 80, 112} steps with zero data bits. With these seeds, the 128b parallel LFSR can be run on the zero-padded data and give the same final result as the single-bit implementation on the original data.
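The seed derivation can be sketched with a reverse step for the zero-data case. This is an illustrative sketch using the same MSB-first register convention assumed for the single-bit LFSR. It relies on the polynomial having its lowest bit set, which lets the feedback bit be recovered from bit 0 of the state. The function names are assumptions for the example.

```python
POLY = 0x04C11DB7
MASK32 = 0xFFFFFFFF
PADS = (16, 48, 80, 112)


def step(state, bit):
    """Forward single-bit LFSR step with one data bit."""
    feedback = ((state >> 31) & 1) ^ bit
    state = (state << 1) & MASK32
    return state ^ POLY if feedback else state


def step_back_zero(state):
    """Undo one forward step that was taken with a zero data bit."""
    feedback = state & 1  # bit 0 of POLY is 1, so bit 0 holds the feedback
    if feedback:
        state ^= POLY
    return (state >> 1) | (feedback << 31)


def derive_seed(pad_bits, target=0xFFFFFFFF):
    """Seed that reaches `target` after pad_bits zero data bits."""
    state = target
    for _ in range(pad_bits):
        state = step_back_zero(state)
    return state


def run(state, bits):
    for b in bits:
        state = step(state, b)
    return state


# Usage: zero-padded data from the derived seed matches the unpadded reference.
data = [1, 0, 1, 1, 0, 0, 1, 0] * 14
for pad in PADS:
    seed = derive_seed(pad)
    print(pad, run(seed, [0] * pad + data) == run(0xFFFFFFFF, data))
```

In a real design the four seeds would be precomputed constants selected by the alignment case, not something derived at run time.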

An interesting follow-up issue is how to handle back-to-back TLPs. Padding the first LCRC beat with zeros potentially means more than a 1:1 bit rate for the LCRC engine compared to the packet data, if there is no idle time between packets. An easy workaround could be to run two LCRC engines that take turns processing packets, although this means twice the logic area. The details are likely to vary in every implementation, so it's not something I will get into here.

Conclusion

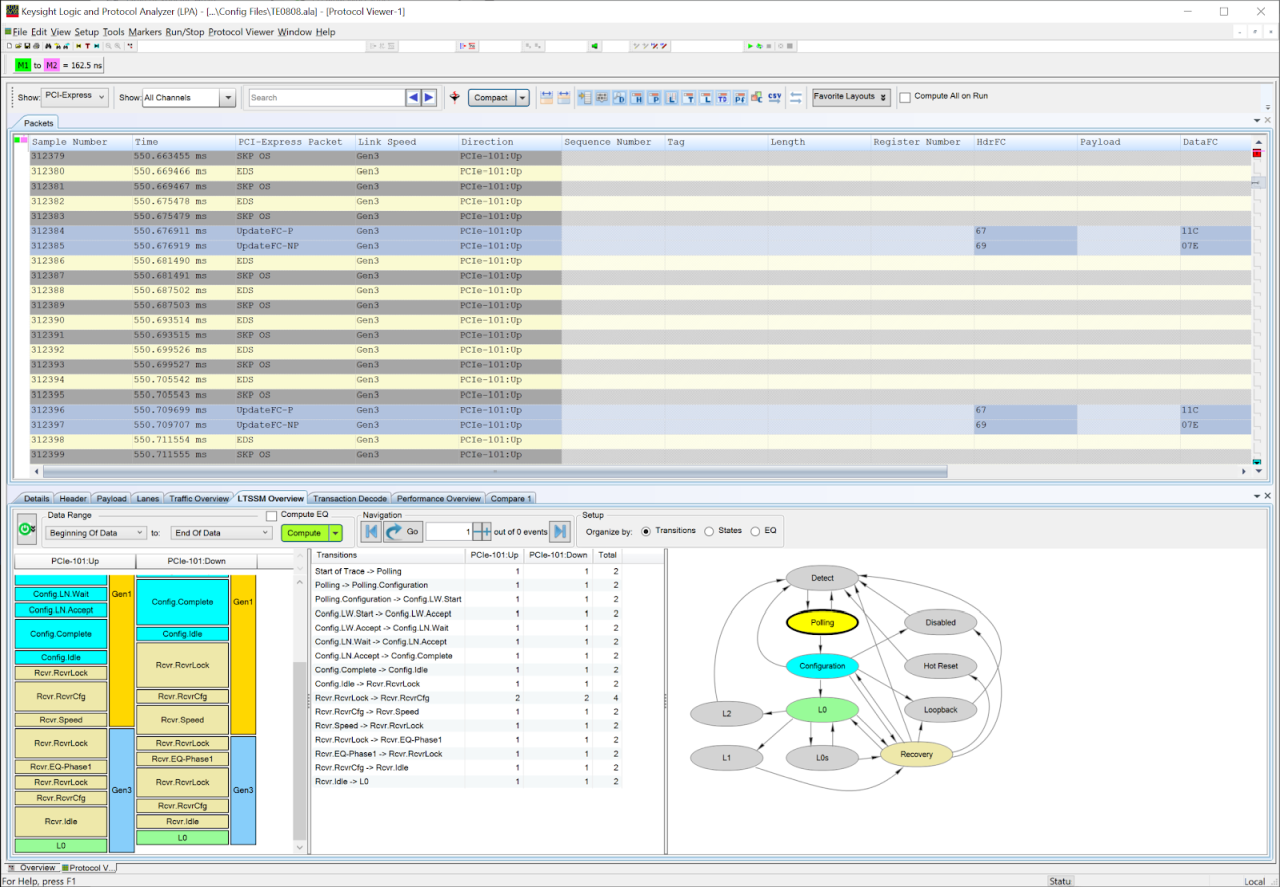

The last couple of posts were setup and background for PCIe in general. This one was more of a microscopic view of a particular logic mechanism key to several aspects of PCIe, and how it can be implemented efficiently on modern FPGAs. There are many such interesting logic puzzles to solve in gateware implementations of PCIe, and I wanted to give just one example at the lowest level I understand. I may cover other logic-level tricks in future posts, but first I think it will be more interesting to introduce what might be the scariest part of PCIe: the Link Training and Status State Machine (LTSSM). To be continued...